Handling Pipelines in Data Science with Jenkins, Ansible, and GitHub Actions: A Complete Guide

admin

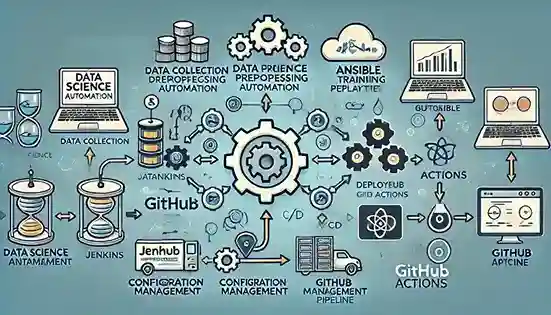

Overview of a data science pipeline with Jenkins, Ansible, and GitHub Actions

Introduction

Data science projects involve multiple steps, from data collection and preprocessing to model training and deployment. Managing these workflows manually can be time-consuming and error-prone. Automation tools such as Jenkins, Ansible, and GitHub Actions can run these steps the same way on every change, which helps make pipelines more efficient, reliable, and scalable.

In this article, we will explore how these tools can be used to handle data science pipelines effectively.

Why Automate Data Science Pipelines?

Automation in data science offers several benefits:

- Consistency: Ensures standardized processes for data ingestion, processing, model training, and deployment.

- Efficiency: Reduces manual intervention and speeds up workflows.

- Scalability: Lets the same pipeline be rerun on larger datasets and more complex models without redesigning the process.

- Reproducibility: Makes experiments and results easier to track and replicate.

Using Jenkins for Data Science Pipelines

Jenkins is a widely used open-source automation server that supports continuous integration and continuous deployment (CI/CD). It can automate tasks such as data extraction, transformation, model training, and deployment.

Steps to Set Up a Data Science Pipeline in Jenkins

- Install and Configure Jenkins:
  - Download and install Jenkins.
  - Install necessary plugins like Git, Docker, and Python.

- Create a Jenkins Job:
  - Define the pipeline stages using a `Jenkinsfile`.
  - Example stages:

        pipeline {
            agent any
            stages {
                stage('Clone Repository') {
                    steps {
                        git 'https://github.com/your-repo.git'
                    }
                }
                stage('Data Preprocessing') {
                    steps {
                        sh 'python preprocess.py'
                    }
                }
                stage('Model Training') {
                    steps {
                        sh 'python train.py'
                    }
                }
                stage('Deployment') {
                    steps {
                        sh 'python deploy.py'
                    }
                }
            }
        }

- Schedule and Monitor Jobs:
  - Use Jenkins’ built-in scheduling and logging for automation and monitoring.
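
The `sh` steps in the Jenkinsfile above depend on each script's exit code: Jenkins marks a stage as failed when the command returns a non-zero status. The article does not show the scripts themselves, so the following is only a minimal sketch of what a `preprocess.py` could look like, assuming CSV input. The function names, the two command-line arguments, and the rule of dropping rows with empty fields are illustrative assumptions, not the author's implementation.

```python
import csv
import sys
from pathlib import Path


def clean_rows(rows):
    """Keep only rows where every field is a non-empty string.

    This is a stand-in for real cleaning logic (an assumption for illustration).
    Rows with missing or surplus columns are dropped as well.
    """
    return [
        row for row in rows
        if all(isinstance(value, str) and value.strip() for value in row.values())
    ]


def preprocess(input_path, output_path):
    """Read a CSV, drop incomplete rows, write the result, return rows kept."""
    with open(input_path, newline="") as src:
        reader = csv.DictReader(src)
        fieldnames = reader.fieldnames or []
        rows = clean_rows(list(reader))
    with open(output_path, "w", newline="") as dst:
        writer = csv.DictWriter(dst, fieldnames=fieldnames)
        writer.writeheader()
        writer.writerows(rows)
    return len(rows)


def main(argv):
    """Return 0 on success and 1 on failure so the CI stage reflects the result."""
    if len(argv) != 2:
        print("usage: preprocess.py INPUT_CSV OUTPUT_CSV", file=sys.stderr)
        return 1
    input_path, output_path = argv
    if not Path(input_path).is_file():
        print(f"input file not found: {input_path}", file=sys.stderr)
        return 1
    kept = preprocess(input_path, output_path)
    print(f"kept {kept} rows")
    return 0
```

To use this as the script a stage calls, the file would end with `raise SystemExit(main(sys.argv[1:]))`, and the stage would pass the two paths, for example `sh 'python preprocess.py raw.csv clean.csv'` (both file names are hypothetical).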

Using Ansible for Data Science Pipelines

Ansible is an automation tool used for configuration management and deployment.

Steps to Use Ansible in Data Science

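- Install Ansible:

      sudo apt update && sudo apt install ansible -y

- Create an Ansible Playbook:
  - Example `deploy.yml` playbook for model deployment:

        - hosts: all
          tasks:
            - name: Ensure dependencies are installed
              apt:
                name: python3-pip
                state: present
              become: true  # installing packages normally needs root privileges
            - name: Deploy the model
              # Assumes deploy.py is already present in the working directory on the host.
              # python3 is used because hosts with python3-pip may not provide a "python" command.
              command: python3 deploy.py

- Run the Playbook:

      ansible-playbook -i inventory deploy.yml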
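
If the playbook is launched from a Python step of a larger pipeline rather than typed by hand, the same command can be assembled in code. The sketch below is one possible wrapper and not part of Ansible itself: `build_playbook_command` and `run_playbook` are hypothetical helper names, while `-i` and `--check` are standard `ansible-playbook` options.

```python
import shutil
import subprocess


def build_playbook_command(playbook, inventory, check=False):
    """Build the argument list for ansible-playbook.

    With check=True the --check option is added, which asks Ansible to
    report what would change without applying it.
    """
    command = ["ansible-playbook", "-i", inventory, playbook]
    if check:
        command.append("--check")
    return command


def run_playbook(playbook, inventory, check=False):
    """Run the playbook and return its exit code (0 means success)."""
    if shutil.which("ansible-playbook") is None:
        raise RuntimeError("ansible-playbook was not found on PATH")
    result = subprocess.run(build_playbook_command(playbook, inventory, check))
    return result.returncode


# Prints the same command shown in the article, as a list of arguments.
print(build_playbook_command("deploy.yml", "inventory"))
```

A dry run with `check=True` can be a useful first step on a new inventory. Tasks that use the `command` module are generally skipped in check mode, so such a run mainly validates the dependency step rather than the deployment itself.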

Using GitHub Actions for Data Science Pipelines

GitHub Actions runs automated workflows that are defined directly inside a GitHub repository.

Steps to Set Up a Data Science Pipeline in GitHub Actions

- Create a `.github/workflows/pipeline.yml` file:

      name: Data Science Pipeline
      on: [push]
      jobs:
        build:
          runs-on: ubuntu-latest
          steps:
            - name: Checkout Repository
              uses: actions/checkout@v4  # updated from v2, which is deprecated
            - name: Set up Python
              uses: actions/setup-python@v5  # updated from v3
              with:
                python-version: '3.8'
            - name: Install Dependencies
              run: pip install -r requirements.txt
            - name: Run Data Preprocessing
              run: python preprocess.py
            - name: Train Model
              run: python train.py
            - name: Deploy Model
              run: python deploy.py

- Push the workflow file to GitHub:
  - GitHub Actions will automatically trigger the pipeline on every push.
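
Because the workflow runs on every push, it helps to record which commit produced a given model. GitHub Actions sets default environment variables such as `GITHUB_SHA` and `GITHUB_RUN_ID` for each run. The sketch below shows how a script like `train.py` might store them next to its metrics. The function names, the output file name, and the metric in the usage comment are assumptions made for illustration.

```python
import json
import os
from datetime import datetime, timezone


def collect_run_metadata(env=None):
    """Gather identifiers for the current run.

    Falls back to "local" when the variables are absent, for example
    when the script runs on a developer machine instead of in a workflow.
    """
    env = os.environ if env is None else env
    return {
        "commit": env.get("GITHUB_SHA", "local"),
        "run_id": env.get("GITHUB_RUN_ID", "local"),
        "created_at": datetime.now(timezone.utc).isoformat(),
    }


def write_run_metadata(path, metrics, env=None):
    """Write run identifiers and metrics to a JSON file and return the record."""
    record = collect_run_metadata(env)
    record["metrics"] = metrics
    with open(path, "w") as fh:
        json.dump(record, fh, indent=2)
    return record


# Possible call at the end of a hypothetical train.py (placeholder metric name):
# write_run_metadata("run_metadata.json", {"accuracy": accuracy})
```

Keeping this file alongside the trained model makes it possible to trace a deployed model back to the exact commit and workflow run that built it.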

Choosing the Right Tool

| Feature | Jenkins | Ansible | GitHub Actions |
|---|---|---|---|
| CI/CD | ✅ | ❌ | ✅ |
| Configuration Management | ❌ | ✅ | ❌ |
| Cloud-Native Support | ❌ | ✅ | ✅ |
| Ease of Use | Moderate | High | High |
| Integration | Extensive | Moderate | Strong with GitHub |

Conclusion

Jenkins, Ansible, and GitHub Actions each offer unique advantages for handling data science pipelines. Jenkins is ideal for end-to-end CI/CD automation, Ansible excels in configuration management, and GitHub Actions integrates seamlessly with GitHub repositories.

Choosing the right tool depends on your project’s needs. For fully automated workflows, a combination of these tools can create a robust, scalable, and efficient data science pipeline.
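
One way to combine the tools without repeating the stage order in each configuration file is to keep that order in a single entry-point script that a Jenkins stage, an Ansible task, or a GitHub Actions step can call. The following is a minimal sketch under that assumption: the runner and the `run_stages` function are hypothetical, and the stage scripts are the ones named in the examples in this article.

```python
import subprocess
import sys

# Stage names and scripts follow the examples in this article.
STAGES = [
    ("preprocess", [sys.executable, "preprocess.py"]),
    ("train", [sys.executable, "train.py"]),
    ("deploy", [sys.executable, "deploy.py"]),
]


def run_stages(stages):
    """Run each stage in order and stop at the first failure.

    Returns a tuple (exit_code, completed_stage_names). The exit code is
    the failing stage's return code, or 0 when every stage succeeds.
    """
    completed = []
    for name, command in stages:
        print(f"running stage: {name}", flush=True)
        result = subprocess.run(command)
        if result.returncode != 0:
            print(f"stage failed: {name}", file=sys.stderr)
            return result.returncode, completed
        completed.append(name)
    return 0, completed
```

Calling `run_stages(STAGES)` and passing the returned exit code to `sys.exit` lets whichever tool invokes the script see a failed stage as a failed step, so the same script behaves consistently under all three tools.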

Implementing automation in data science not only saves time but also enhances reproducibility and reliability, making it an essential practice for modern data teams.

By leveraging these automation tools, you can streamline your data science workflows and focus on delivering impactful insights rather than dealing with manual processes.

Looking for More?

Stay tuned for more insights on data science, automation, and DevOps best practices on orientalguru.co.in.